Overview

Codeducator is a desktop address book application for private programming language tutors.

Users can track the progress of their students, manage their tutoring schedule, and record other important information about their students.

Codeducator has a graphical user interface built with JavaFX, but most user interactions happen through a command line interface. It is written in Java and has about 10 kLoC.

This project is based on the AddressBook-Level4 created by the SE-EDU initiative.

Summary of contributions

- Major enhancement: Implementation of Natural Language Processing (NLP) in the CLI
  - What it does: Allows the user to enter conversational English into the command line. The intention behind the input is deciphered and the corresponding command is called.
  - Justification: New users of Codeducator might find themselves constantly referring to the help document, so this enhancement allows more intuitive use of the commands.
  - Credits: The enhancement is implemented using the IBM Watson™ Assistant service and the relevant methods documented in its API.

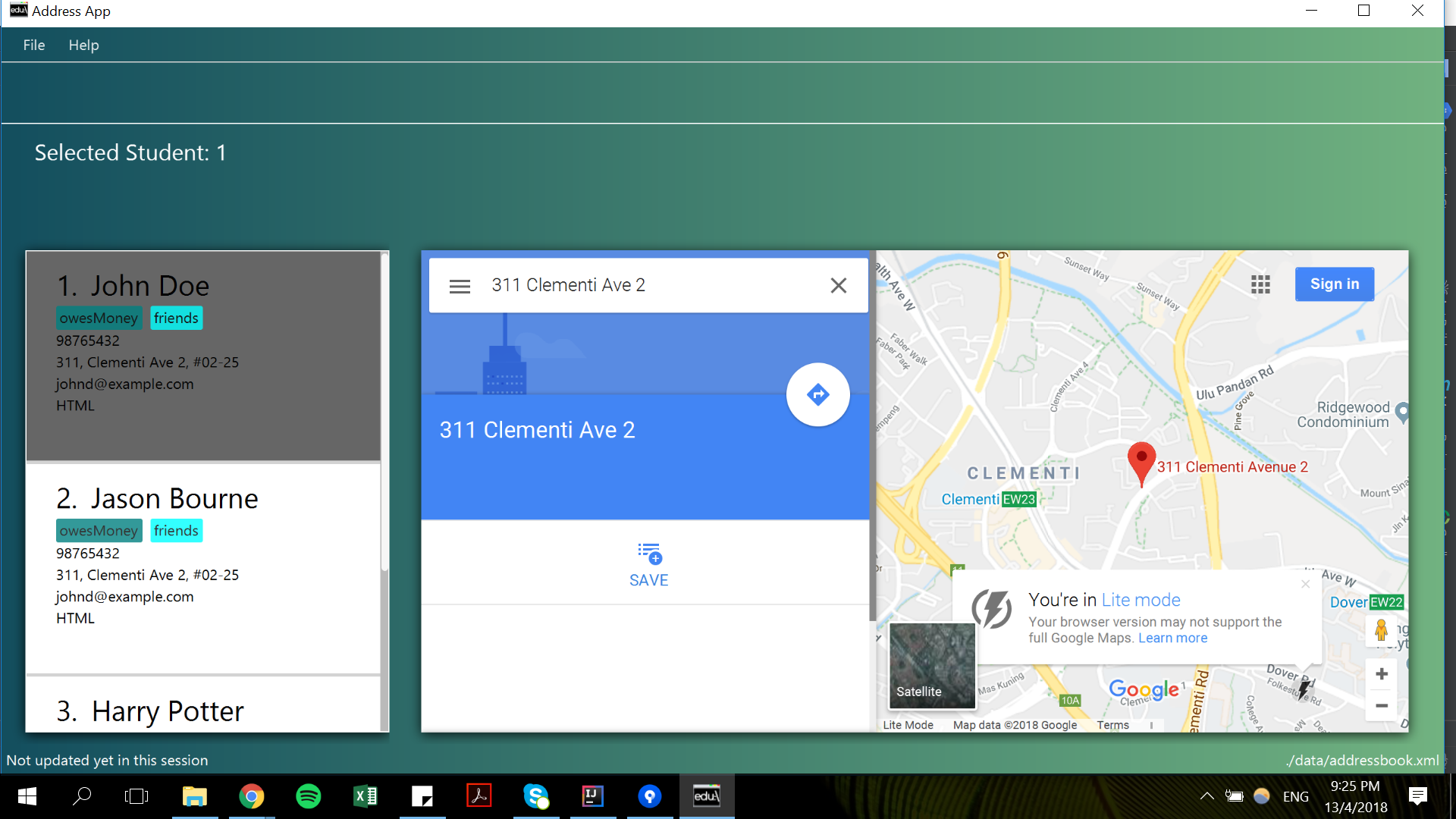

- Minor enhancement: Rendering of a student’s address on Google Maps upon selection
  - What it does: A Google Maps page with the selected student’s address is shown in the embedded browser when the student is selected.
  - Justification: To make it easier to plan the route to the student’s house, the tutor gets the required information simply by selecting the student.

- Code contributed: [Functional code] [Test code]
- Other contributions:
  - Enhancements to existing features:
    - Improved the select command to show the location of the student on Google Maps
  - Documentation:
    - Proofread the User Guide and made alterations to make it more personal to the user.
  - Tools:
    - Integrated a third-party library (IBM Watson™ Assistant service) into the project (https://github.com/CS2103JAN2018-W09-B3/main/issues/#24)

Contributions to the User Guide

Given below are sections I contributed to the User Guide. They showcase my ability to write documentation targeting end-users.

Interacting using free-form English

Codeducator also lets you use the application’s features with everyday English sentences, without the need to remember specific command words.

| An active internet connection is required for this to work. |

How do I use this?

Refer to the table below to see which features you can invoke using conversational English.

| The examples below are just for your reference. Any phrase or sentence can be used, so long as your intention is precise and clear. |

| Command | Examples | |
|---|---|---|
| | "Empty everything" | |
| | "reuse previous" | |
| | "negate the previous action" | |
| | "I need assistance" | |
| | "i wish to quit" | |
| | "archives of commands entered" | |
| | "enumerate everyone" | |
| | "show me my timetable" | |
| | "Remove Jason" | |
| | "Single out Jason" | |

| Currently, the Delete and Select commands only detect English names! |

select command is successful

Guidelines for using this feature

- Be sure to check for spelling errors in your sentences.

| Spelling errors can be misinterpreted and the wrong command might be invoked. |

- Be as precise as possible in specifying your intentions in the sentences, to prevent misinterpretation of commands.
- If the wrong feature is invoked, you can always use the undo command to revert any undesired changes.

Contributions to the Developer Guide

Given below are sections I contributed to the Developer Guide. They showcase my ability to write technical documentation and the technical depth of my contributions to the project.

Location feature

Selecting a student using the select command renders their location on Google Maps.

Current Implementation

The student’s address is extracted and converted into a string, which is appended to the end of SEARCH_PAGE_URL in the following function:

    private void loadStudentPage(Student student) {
        Address location = student.getAddress();
        String append = location.urlstyle();
        loadPage(SEARCH_PAGE_URL + append);
    }
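As an illustration of the conversion step, the sketch below shows one way an address could be turned into a URL-safe string before being appended. It is a minimal sketch under assumptions: the class `AddressUrlSketch`, the method `toUrlStyle`, and the URL value shown are hypothetical stand-ins, and the project’s own `urlstyle()` and `SEARCH_PAGE_URL` may differ.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper; the project's own Address.urlstyle() may behave differently. */
final class AddressUrlSketch {
    // Assumed value for illustration only; the real SEARCH_PAGE_URL is defined in the project.
    static final String SEARCH_PAGE_URL = "https://www.google.com/maps/search/?api=1&query=";

    /** Encodes a free-form address so that it is safe to use as a query parameter value. */
    static String toUrlStyle(String address) {
        // URLEncoder applies form encoding: spaces become '+', reserved characters become %XX.
        return URLEncoder.encode(address.trim(), StandardCharsets.UTF_8);
    }

    /** Appends the encoded address to the search page URL. */
    static String buildSearchUrl(String address) {
        return SEARCH_PAGE_URL + toUrlStyle(address);
    }

    public static void main(String[] args) {
        System.out.println(buildSearchUrl("Blk 30 Geylang Street 29, #06-40"));
    }
}
```

Encoding matters here because characters such as `#` and `,` in an address would otherwise be interpreted as part of the URL syntax rather than as part of the search text.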

An example is provided below when select 1 is entered as a command:

Design Considerations

| Aspect | Alternatives | Pros (+)/ Cons(-) |
|---|---|---|
| Implementation of displaying student locations | Display it on the embedded browser (current choice) | + : Easy to implement, simply alter the default webpage |
| | Creating a new window to display the location | + : This would allow concurrent display of locations of many students |

Natural Language Processing capabilities

Allows users to invoke features using free-form English, in addition to the keywords specific to each feature.

Current implementation

An AI bot (agent), trained to process sentences based on the application’s features, is integrated into the application. Its primary goal is to identify the intention behind the user’s input and match it with the corresponding feature that the user wishes to use.

A new class ConversationCommand is written to process sentences that do not match the syntax of any features.

Making API calls via the REST API

The method below, written using the IBM Watson™ Assistant service API, is used to make the API call to the agent.

The userInput field contains the sentence that the user inputs.

    public static MessageResponse getMessageResponse(String userInput) {
        MessageResponse response = null;
        InputData input = new InputData.Builder(userInput).build();
        MessageOptions option = new MessageOptions.Builder("19f7b6f4-7944-419d-83a0-6bf9837ec333").input(input).build();
        response = service.message(option).execute();
        return response;
    }

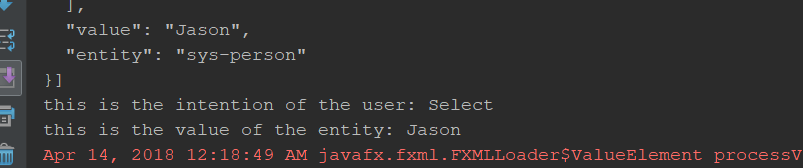

Intents and Entities

Intents refer to the intention behind the user’s input, and entities refer to objects of interest, e.g. name, address, location.

The agent is integrated into the AddressBookParser class, and the following code snippet deciphers the intents and the entity embedded in the user’s input.

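    // Processes the userInput
    response = ConversationCommand.getMessageResponse(userInput);
    intents = response.getIntents();
    entities = response.getEntities();

    // Reads the intents returned by the agent; the last one is kept
    for (int i = 0; i < intents.size(); i++) {
        intention = intents.get(i).getIntent();
    }

    // Entities are optional, so they are only read when present
    if (entities.size() != 0) {
        // Iterates over the entities themselves; the last value is kept
        for (int i = 0; i < entities.size(); i++) {
            entity = entities.get(i).getValue();
        }
    }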
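The same extraction can be expressed without index loops. The sketch below is only an illustration under assumptions: `IntentEntitySketch` and its nested `Intent` type are hypothetical stand-ins and not part of the IBM Watson™ SDK, and choosing the intent with the highest confidence is an assumed policy rather than what the snippet above does.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical stand-ins for the agent's response types; not the Watson SDK classes. */
final class IntentEntitySketch {
    /** Minimal intent holder: a name plus the agent's confidence in it. */
    static final class Intent {
        final String name;
        final double confidence;

        Intent(String name, double confidence) {
            this.name = name;
            this.confidence = confidence;
        }
    }

    /** Returns the name of the most confident intent, or empty if none was detected. */
    static Optional<String> topIntent(List<Intent> intents) {
        return intents.stream()
                .max(Comparator.comparingDouble((Intent i) -> i.confidence))
                .map(i -> i.name);
    }

    /** Returns the first entity value, or empty when the input carried no entity. */
    static Optional<String> firstEntity(List<String> entityValues) {
        return entityValues.stream().findFirst();
    }

    public static void main(String[] args) {
        List<Intent> intents = List.of(new Intent("Select", 0.93), new Intent("Delete", 0.12));
        System.out.println(topIntent(intents).orElse("(none)"));
        System.out.println(firstEntity(List.of("Jason")).orElse("(none)"));
    }
}
```

Returning an `Optional` makes the "entity may be absent" case explicit at the call site instead of relying on a size check.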

| Every single input always has an intent, but that is not the case for entities! |

For further clarification, refer to the screenshot below:

The intent is Select and the entity refers to the name of a person, which takes on a value of Jason in this particular case.

Matching the desired command

After the intent and any entities in the user’s input are identified, the feature matching the intent is called, with the entities passed as parameters to the feature’s method.
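The matching step can be pictured as a small dispatcher. This is a minimal sketch, assuming hypothetical intent names (`select`, `delete`, `undo`) and a hypothetical class `IntentDispatchSketch`; the actual mapping in AddressBookParser is not shown in this guide and may be organised differently.

```java
import java.util.Locale;

/** Hypothetical dispatcher; intent names and command words are assumed for illustration. */
final class IntentDispatchSketch {
    /** Builds keyword-style command text from a recognised intent and an optional entity. */
    static String toCommandText(String intent, String entity) {
        // An absent entity contributes no argument to the command.
        String arg = (entity == null || entity.isEmpty()) ? "" : " " + entity;
        switch (intent.toLowerCase(Locale.ROOT)) {
        case "select":
            return "select" + arg;
        case "delete":
            return "delete" + arg;
        case "undo":
            // Commands without parameters ignore any entity.
            return "undo";
        default:
            throw new IllegalArgumentException("Unrecognised intent: " + intent);
        }
    }

    public static void main(String[] args) {
        System.out.println(toCommandText("Select", "Jason"));
        System.out.println(toCommandText("Undo", null));
    }
}
```

Rejecting unknown intents with an exception keeps a misclassified sentence from silently running the wrong command.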

select command is invoked, passing Jason as a parameter, after the intent and entity are identified.

Design Considerations

| Aspect | Alternatives | Pros (+)/ Cons(-) |
|---|---|---|
| Selection of an appropriate third-party API to implement NLP | IBM Watson™ Assistant service (current choice) | + : Offers extensive NLP functions that are easy to implement. Its user-friendly interface makes the model easy to train, and the model is highly scalable. |
| | Stanford CoreNLP | + : Possesses a powerful and comprehensive API, comprising a set of stable and well-tested natural language processing tools, widely used by various groups in academia, industry, and government. |
| | Google Cloud Natural Language | + : Offers a variety of functions (Syntax Analysis, Entity Recognition, Sentiment Analysis etc.) through a REST API that is powerful enough to analyse texts properly. Texts can be uploaded in the request or integrated with Google Cloud Storage. |